Image moderation now available

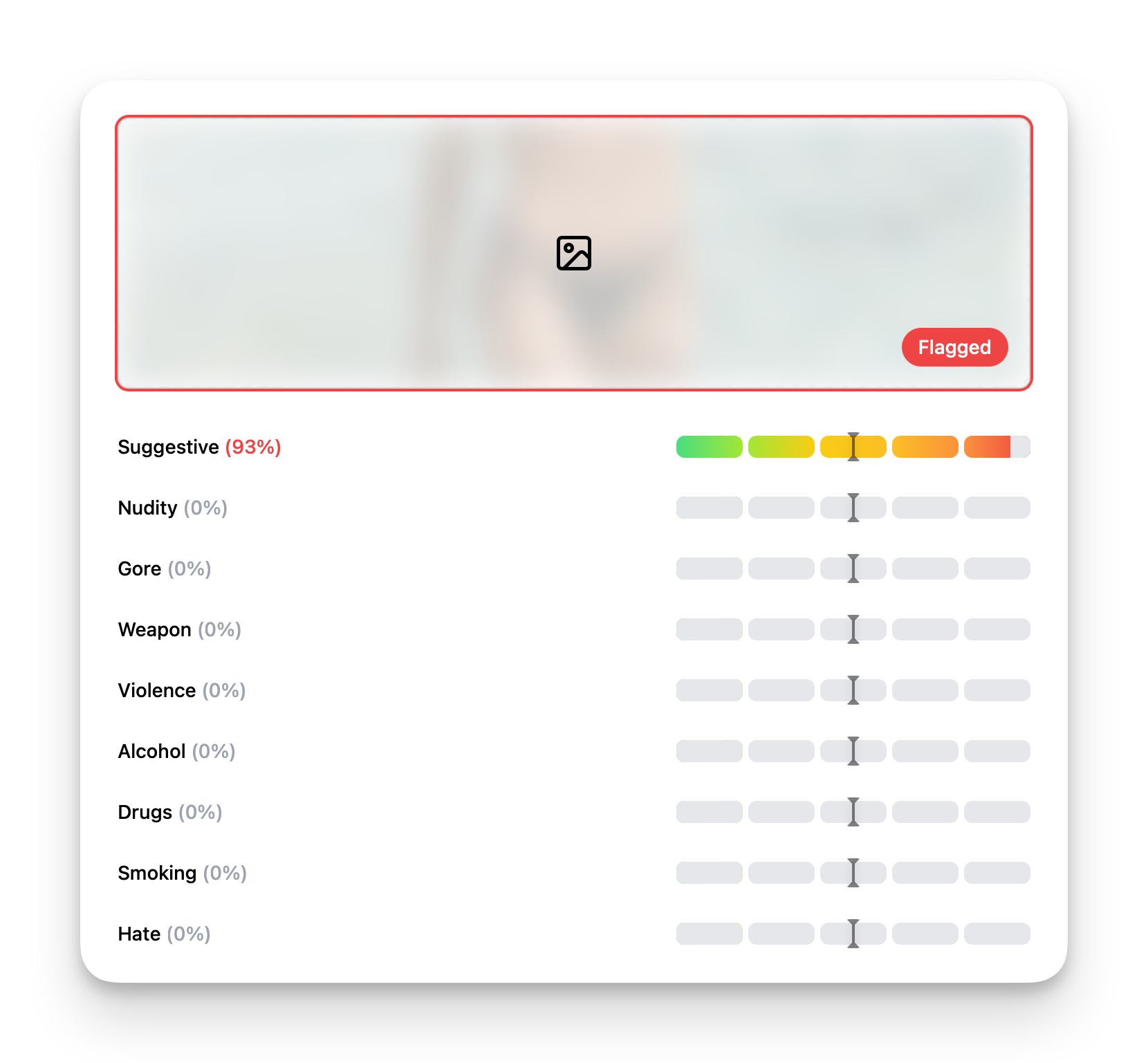

I'm excited to announce that you can now moderate images with Moderation API. Setting up image moderation works much like text moderation: you can adjust thresholds and disable any labels you don't care about when flagging content.

We offer nine labels out of the box, all available through a single model.

- Nudity: Exposed male or female genitalia, female nipples, sexual acts.

- Suggestive: Partial nudity, kissing.

- Gore: Blood, wounds, death.

- Violence: Graphic violence, causing harm, weapons, self-harm.

- Weapon: Weapons either in use or displayed.

- Drugs: Drugs such as pills.

- Hate: Symbols related to Nazism, terrorist groups, white supremacy, and more.

- Smoking: Smoking or smoking related content.

- Alcohol: Alcohol or alcohol related content.

In case you need any other labels, please let us know.
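
To make the idea of thresholds and disabled labels concrete, here is a minimal sketch of how flagging could work on your side. It is not the actual Moderation API implementation: the lowercase label keys, the 0 to 1 score scale, the `FlagConfig` shape, and the `flaggedLabels` helper are all assumptions made up for illustration.

```typescript
// Hypothetical label keys, based on the nine labels listed above.
const LABELS = [
  "nudity", "suggestive", "gore", "violence", "weapon",
  "drugs", "hate", "smoking", "alcohol",
] as const;

type Label = (typeof LABELS)[number];

// Assumption: each label gets a confidence score between 0 and 1.
type Scores = Partial<Record<Label, number>>;

// Hypothetical configuration: a default threshold, optional per-label
// overrides, and a list of labels that should never flag content.
interface FlagConfig {
  defaultThreshold: number;
  thresholds?: Partial<Record<Label, number>>;
  disabled?: Label[];
}

// Returns the labels whose score meets or exceeds their threshold,
// skipping disabled labels and labels with no score.
function flaggedLabels(scores: Scores, config: FlagConfig): Label[] {
  const disabled = new Set<Label>(config.disabled ?? []);
  const flagged: Label[] = [];
  for (const label of LABELS) {
    const score = scores[label];
    if (score === undefined || disabled.has(label)) continue;
    const threshold = config.thresholds?.[label] ?? config.defaultThreshold;
    if (score >= threshold) flagged.push(label);
  }
  return flagged;
}

// Example: a stricter bar for "suggestive", and "alcohol" disabled entirely.
const exampleScores: Scores = { nudity: 0.1, suggestive: 0.7, weapon: 0.9, alcohol: 0.95 };
const exampleConfig: FlagConfig = {
  defaultThreshold: 0.5,
  thresholds: { suggestive: 0.8 },
  disabled: ["alcohol"],
};

// Only "weapon" is flagged here.
console.log(flaggedLabels(exampleScores, exampleConfig));
```

In this sketch, raising a label's threshold makes it flag less often, while disabling a label removes it from the decision no matter how high its score is.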

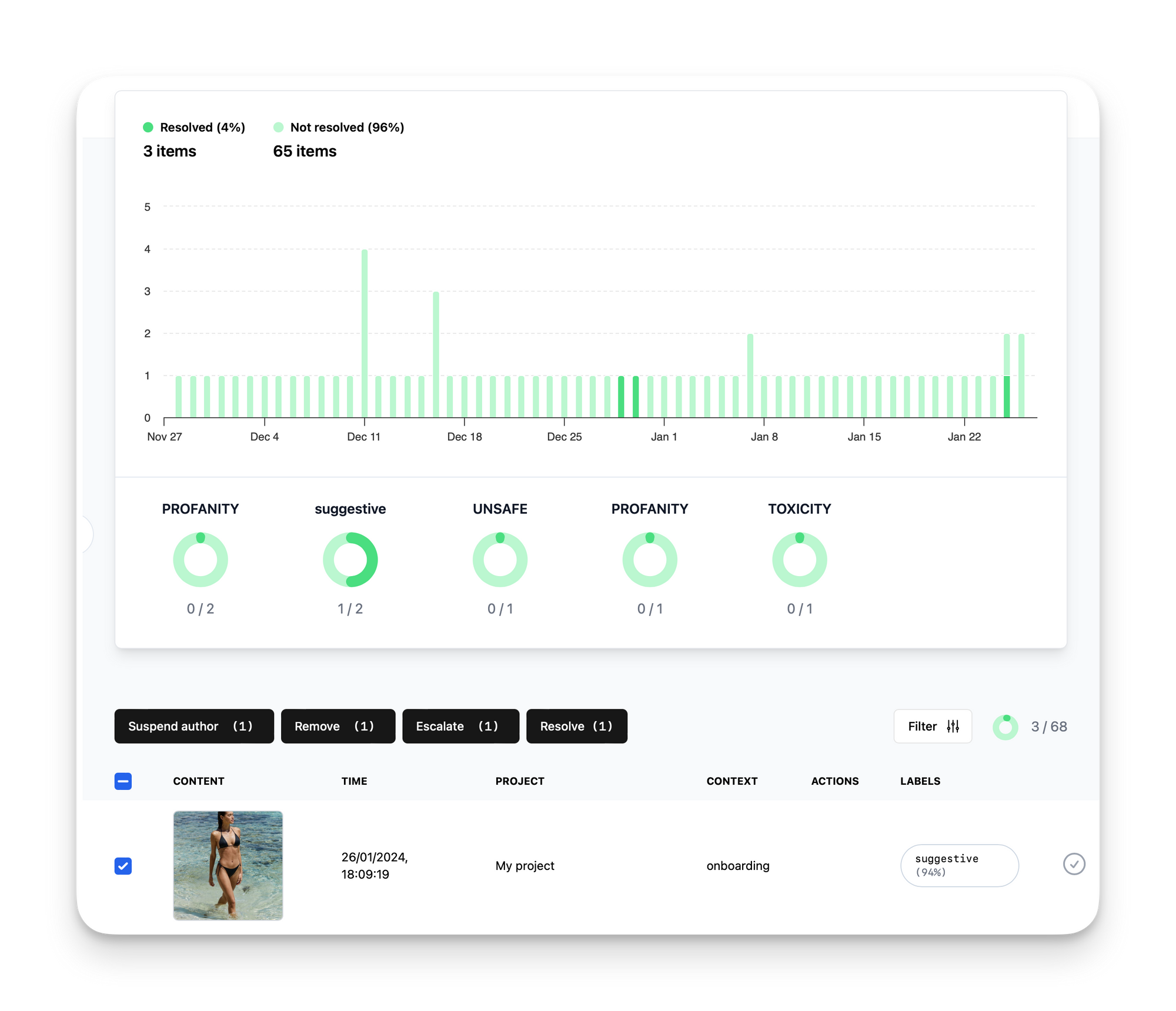

Support in the review queue

Image moderation also works seamlessly with the content review queue. There you'll see a small preview of each image, and you can perform the same actions as you do with text.

New endpoint

The new capabilities are used via a new endpoint for image moderation.
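
As a rough idea of what calling such an endpoint might look like, the sketch below builds an HTTP request for an image URL. Everything about the request shape is an assumption for illustration: the endpoint is passed in by the caller, and the bearer-token header, the `url` body field, and the `buildImageModerationRequest` helper are hypothetical. The API reference is the authoritative source for the real format.

```typescript
// Shape of the request this sketch produces; compatible with fetch(url, init).
interface ImageModerationRequest {
  url: string;
  init: {
    method: "POST";
    headers: Record<string, string>;
    body: string;
  };
}

// Hypothetical helper: builds a JSON POST request for an image URL.
// Assumptions: bearer-token auth and a JSON body with a `url` field.
function buildImageModerationRequest(
  endpoint: string, // take the real endpoint from the API reference
  apiKey: string,
  imageUrl: string,
): ImageModerationRequest {
  return {
    url: endpoint,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ url: imageUrl }),
    },
  };
}

// Usage, inside an async function (values are placeholders):
//   const { url, init } = buildImageModerationRequest(endpoint, apiKey, imageUrl);
//   const response = await fetch(url, init);
//   const result = await response.json();
```

Keeping request construction separate from the network call makes it easy to adjust the payload once you have checked the documented format.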

You can find the API reference here:

Analyze image - Moderation API

Or use our TypeScript package from npm here:

@moderation-api/sdk

Install it in your project by running `npm i @moderation-api/sdk`.