Updates

Product updates

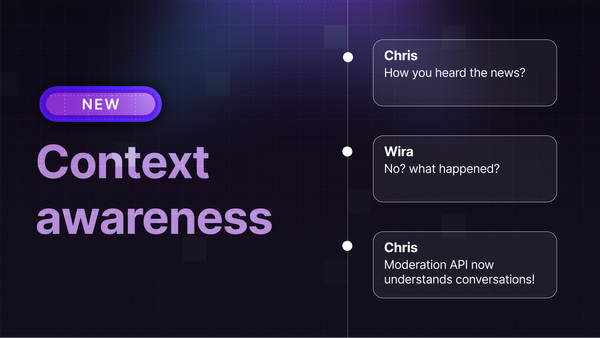

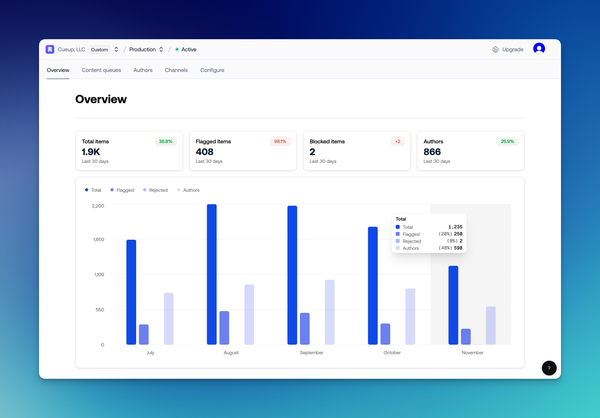

We've shipped two huge upgrades to make Moderation API faster to set up, richer in signal, and easier to maintain. And next week we're launching something new every day (Dec 8-12).

Revamped dashboard

Next time you sign in, the dashboard will feel very different. We rebuilt